3 Tips for Reliable and Valid Assessment in the Maritime Industry

Jan 20, 2016 · Murray Goldberg · Assessment

The following post is part of a series of articles originally written by Murray Goldberg for Maritime Professional (found here). We have decided to re-post the entire series based on reader feedback.

Introduction

If you have been following along, this is the fifth article in my series on officer and crew member assessment in the maritime industry. This is a topic where there is much to say, and one for which there has been a significant amount of interest. Although I know I will revisit this topic many times in the future, for now I am going to close off the series with two more articles.

This article is intended to “put it all together” and present a list of good techniques and practices (along with supporting arguments) that contribute to valid and reliable assessment in the maritime industry.

Goal: Achieve Comprehensive, Valid and Reliable Assessment

Before we can discuss tips to help achieve our goal, let’s first define our goal for assessment. It may be defined many ways, but I believe one reasonable definition to be “comprehensive, reliable and valid assessment”.

Although reliability and validity were defined in a previous article, let’s quickly remind ourselves what is meant by valid and reliable assessment.

An assessment technique is reliable if it yields the same results consistently, time after time, given the same input (in this case, the same knowledge or skill level).

An assessment technique is valid if it measures the thing it is supposed to measure, and if it yields a correct measurement of that thing. Taking the bathroom scale example as an assessment technique, the scale is reliable if it always gives me the same weight (assuming my weight does not change). It is valid if it shows my correct weight.
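The bathroom scale analogy can be put into numbers. The following is only a minimal sketch: the readings, the true weight, and the function names are hypothetical, and using standard deviation for consistency and average error for correctness is one simple way to express the two ideas, not a formal psychometric definition.

```python
from statistics import mean, stdev


def reliability_spread(readings):
    """Spread of repeated readings; a smaller value means a more reliable scale."""
    return stdev(readings)


def validity_bias(readings, true_value):
    """Average reading minus the true value; closer to zero means more correct on average."""
    return mean(readings) - true_value


# Hypothetical data: the same person (true weight 80 kg) steps on each scale four times.
true_weight = 80.0
scale_a = [85.0, 85.1, 84.9, 85.0]  # very consistent, but consistently wrong
scale_b = [78.0, 82.5, 79.0, 80.5]  # correct on average, but erratic

for name, readings in (("A", scale_a), ("B", scale_b)):
    spread = reliability_spread(readings)
    bias = validity_bias(readings, true_weight)
    print(f"Scale {name}: spread={spread:.2f} kg, bias={bias:+.2f} kg")
```

Scale A is reliable but not valid, while scale B is right on average but too inconsistent to trust on any single reading. A good assessment, like a good scale, needs both a small spread and a small bias.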

Ideally, our assessments of officers and crew should be reliable, valid, and as comprehensive as possible – measuring as much as is reasonably possible about their ability to perform their duties safely under all conditions. This is our goal. The question is, how do we achieve this goal?

Tip 1: Use a Combination of Assessment Techniques

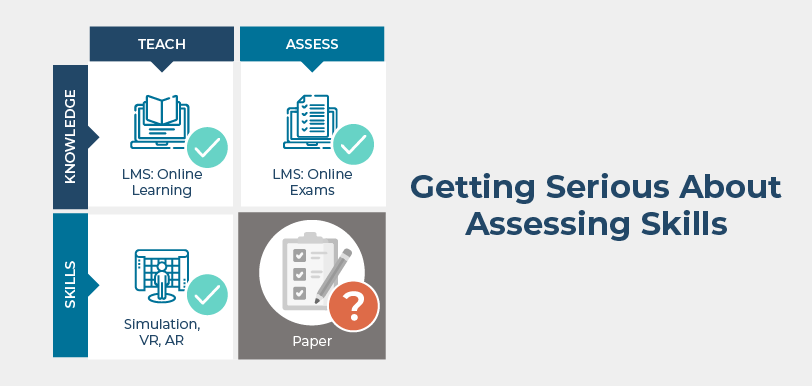

We discussed a variety of assessment techniques in past articles of this series. Each technique has strengths and weaknesses. For example, some techniques are excellent at testing knowledge. Other techniques are very good at testing skills. Some techniques are highly objective (reliable), while others are important, but much more subjective (less reliable).

Each technique offers us a tool which can be used to great effect, but no single technique is comprehensive. Therefore, by employing a combination of techniques with regard to the strength of each, we can achieve a much better overall assessment than by employing any one technique alone.

Example 1: Combining Techniques to Improve Reliability and Comprehensiveness

Demonstrative examinations, for example, are very common in the maritime industry. An examiner may ask the candidate to don a firefighting suit. This is a critically important skill and must be assessed. Asking the candidate to do it is a good way to test the skill. However, while we would never consider eliminating such a test, the test is incomplete in at least two ways:

- It is highly subjective (not reliable). Different assessors will grade “harder” or “easier” and give different scores for the same performance.

- The test is not comprehensive. Even though it does provide very useful information, it cannot fully test the candidate’s knowledge of, or skill with, the equipment, especially under varying or extreme conditions.

We can improve the reliability and comprehensiveness of the assessment by combining this demonstrative exam with a different kind of assessment technique which is more comprehensive and more objective. For example, if we also ask the candidate to take a written, multiple-choice exam covering the same materials, we would now have additional insight into the depth of the candidate’s knowledge of the firefighting equipment. This is important, because deep knowledge is critical in being able to make decisions when presented with unexpected or emergency situations. In addition, because multiple-choice exams are highly objective, they would add reliability to the assessment process.

Example 2: Combining Techniques for Specific Assessment Goals

As a second example, there are specific types of assessments for specific assessment goals. Using a combination of assessment techniques allows you to cover the goals more precisely. For example, you may recall the article on attitude assessment. It is difficult to assess attitude using demonstrative or “normal” written exams. However, it can be assessed using 360-degree evaluations or certain kinds of psychological testing.

Likewise, assessing knowledge and skills typically requires very different assessment techniques. Use any one technique alone and you are missing part of the picture. If you use a combination of techniques – for example:

- Demonstrative assessments to assess basic skills,

- Written assessments to assess knowledge, and

- 360 assessments to assess attitude

then you are more directly targeting each of your varying assessment goals.

So, while we could use any one type of assessment alone, by combining multiple techniques we more directly target each assessment goal, and we improve the objectivity (and therefore reliability and validity) of our assessments. Not only are we using “the right tool for the right job”, but we are creating a larger and more varied basket of data on which we can form a final assessment decision of the candidate.

Tip 2: Separate the Trainer from the Assessor

If your trainer and assessor are the same person, you may be making a mistake. They should be separate people.

It is the job of the trainer to ensure a candidate has all the required skills and knowledge for the job at hand. The trainer is there to support, instruct, and provide resources. The trainer should be a “safe” resource for the candidate. The candidate should not feel any judgement when they ask questions or practice skills. The assessor has a different job – that of ensuring that no candidate is assigned a job duty they are not prepared for.

Think of the trainer as a producer and the assessor as a consumer/buyer. It is the job of the trainer to produce qualified candidates. It is the job of the assessor to critically appraise the fitness of these candidates for consumption. If the producer and consumer are the same person, then we have a conflict. Most notably:

- If the trainer knows the exact nature of the assessment that follows, human nature will cause him/her to “train to the test”. It will reflect well on the trainer if the candidate performs well in the assessment. The problem is that assessments can never be comprehensive. Therefore, training must always assume *everything* will be tested, even though this is never the case. By separating trainer and assessor (and keeping knowledge of specific assessment details from the trainer), we provide a strong incentive for comprehensive training.

- If the assessor is also the person who performed the training, then they are in a conflicting position because failure of the candidate reflects poorly on their ability as a trainer. Likewise, the relationship they establish with the candidate during training may cause them to be less objective when it comes time for assessment. This is important in the maritime industry where many of the skill-based assessments are highly subjective in nature.

- If the trainer and assessor are the same person, then there is no clear line between training and assessment. There should be. If not, the candidate will feel (correctly) that they are being assessed during training. This may make them reluctant to ask important questions, ask for clarifications, or ask for more practice time for fear that it will reflect badly on their knowledge or abilities. This greatly undermines the effectiveness of the training period.

Tip 3: Use Rubrics

As I mentioned above, it is difficult to test skills objectively, and skills are an important part of maritime assessment. We can improve the objectivity of skill assessment by using something called “rubrics”.

A rubric is simply a written guide for assigning grades on an assessment. It provides instructions to the assessor on how to grade. If we look back to the example of donning a firefighting suit, a couple of rubrics might look like the following:

- Speed in donning the suit

- Skill and Proficiency

If you compare the use of the rubrics, above, to less-guided alternative questions such as:

The candidate demonstrates the ability to don the firefighting suit and related gear: Yes/No

then you probably agree that the use of rubrics will not only provide a much more reliable and objective assessment outcome, but will also provide a lot more data for use in making a final assessment decision.
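To make the contrast concrete, here is a rough sketch of how a rubric could be captured as structured data so that every assessor grades against the same written descriptions. Only the two criterion names come from the example above. The 1 to 3 scale, the wording of each level, and the names `Rubric` and `grade_candidate` are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Rubric:
    """One grading criterion with a written description for each allowed score."""
    criterion: str
    levels: dict  # maps score (int) -> description (str)

    def describe(self, score):
        # Reject scores that the rubric does not define, so assessors cannot improvise.
        if score not in self.levels:
            raise ValueError(f"{score!r} is not a defined level for {self.criterion!r}")
        return self.levels[score]


# Hypothetical level descriptions; a real rubric would be written by subject experts.
RUBRICS = [
    Rubric("Speed in donning the suit", {
        1: "Did not finish within the time limit set for the exercise",
        2: "Finished within the time limit",
        3: "Finished well within the time limit",
    }),
    Rubric("Skill and Proficiency", {
        1: "Needed prompting or left gear incorrectly fitted",
        2: "Fitted all gear correctly with minor hesitation",
        3: "Fitted all gear correctly and confidently",
    }),
]


def grade_candidate(rubrics, scores):
    """Return the per-criterion descriptions and the total for one candidate."""
    details = {r.criterion: r.describe(scores[r.criterion]) for r in rubrics}
    total = sum(scores[r.criterion] for r in rubrics)
    return {"details": details, "total": total}


result = grade_candidate(RUBRICS, {
    "Speed in donning the suit": 2,
    "Skill and Proficiency": 3,
})
print(result["total"])
for criterion, description in result["details"].items():
    print(f"{criterion}: {description}")
```

Compared with a single Yes/No answer, each graded criterion leaves a record of what was observed and why a given score was assigned.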

Conclusion

We have thus far discussed 3 of the 6 tips in this article on maritime industry assessment. This article is already too long (congratulations to those of you who have made it this far). Therefore, I will publish the remainder of the 6 tips in the next post.

If you would like to be notified when the next article comes out (and have not already signed up to receive article notifications), please follow the blog and you’ll receive a notification of each upcoming article.

Until then – have a wonderful day and thanks for reading!
